Introduction to Containers for High-Performance Computing (HPC)

An introductory session on using containers in HPC environments.

Overview

Duration: 1h30

- Learning Objectives
  - Understand the concept of containers and how they differ from traditional virtualization.
  - Explore containerization benefits for HPC workloads.
  - Introduce key container technologies: Docker, Singularity/Apptainer, and Podman.
  - Demonstrate how to use containers in an HPC workflow.

But first, who are we and why are we here?

Cemosis, the Center for Modeling and Simulation in Strasbourg, plays a critical role in advancing computational research through the development of Feel++ within the Feel++ Consortium. Here’s why containerization matters for Cemosis:

- Enhanced Reproducibility:
  - With Feel++ being a sophisticated framework for finite element analysis, ensuring that every simulation runs in an identical environment is crucial for scientific validation. Containers guarantee that dependencies, libraries, and configurations remain consistent across all testing and production runs.
- Simplified Collaboration:
  - The Feel++ Consortium involves collaboration among multiple institutions. Containers enable researchers at Cemosis and partner organizations to share a single, standardized environment, reducing setup complexities and streamlining collaborative development.
- Optimized HPC Workflows:
  - HPC applications in modeling and simulation often require finely tuned performance optimizations. Containerization encapsulates these optimizations, ensuring that computational experiments run efficiently on various HPC systems without manual reconfiguration.
- Rapid Deployment and Testing:
  - With containerized workflows, Cemosis can quickly iterate on new features or bug fixes in Feel++, automatically testing changes in a controlled environment. This accelerates development cycles and improves the overall quality of the software.
- Future-Proofing Research:
  - As hardware and software evolve, maintaining reproducible environments becomes increasingly challenging. Containers provide a robust solution to preserve the computational environment, ensuring long-term reproducibility and sustainability of research outputs.

This section emphasizes that containerization is not just a technical enhancement but a strategic enabler for research and collaboration at Cemosis.

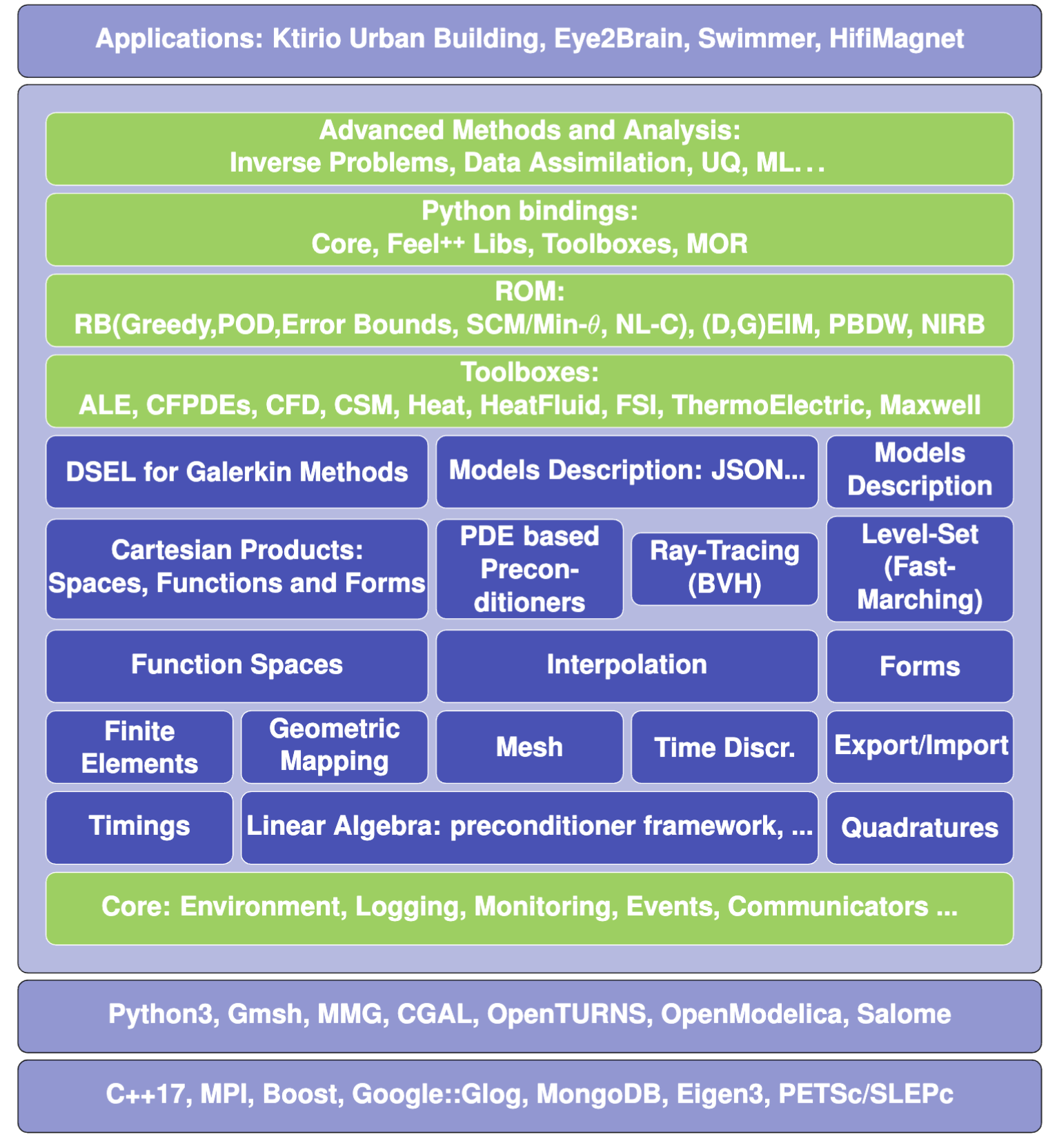

Feel++ Overview

- Framework to solve problems based on ODEs and PDEs
- C++17 and C++20
- Python layer using Pybind11
- Seamless parallelism with default communicator, including ensemble runs
- Powerful interpolation and integration operators working in parallel
- Advanced post-processing, including for high-order approximation and high-order geometry
- Build: CMake and CMake Presets
- Docs: docs.feelpp.org including dynamic pages that can be downloaded as notebooks
- DevOps:
  - GitHub Actions: CI/CD and continuous benchmarking on in-house and EuroHPC systems
  - Packaging: Ubuntu/Debian, Spack, MacPorts
  - Containers: Docker, Apptainer
- Tests: About a thousand tests in sequential and parallel C++ and Python
- Usage: Research, R&D, Teaching, Services

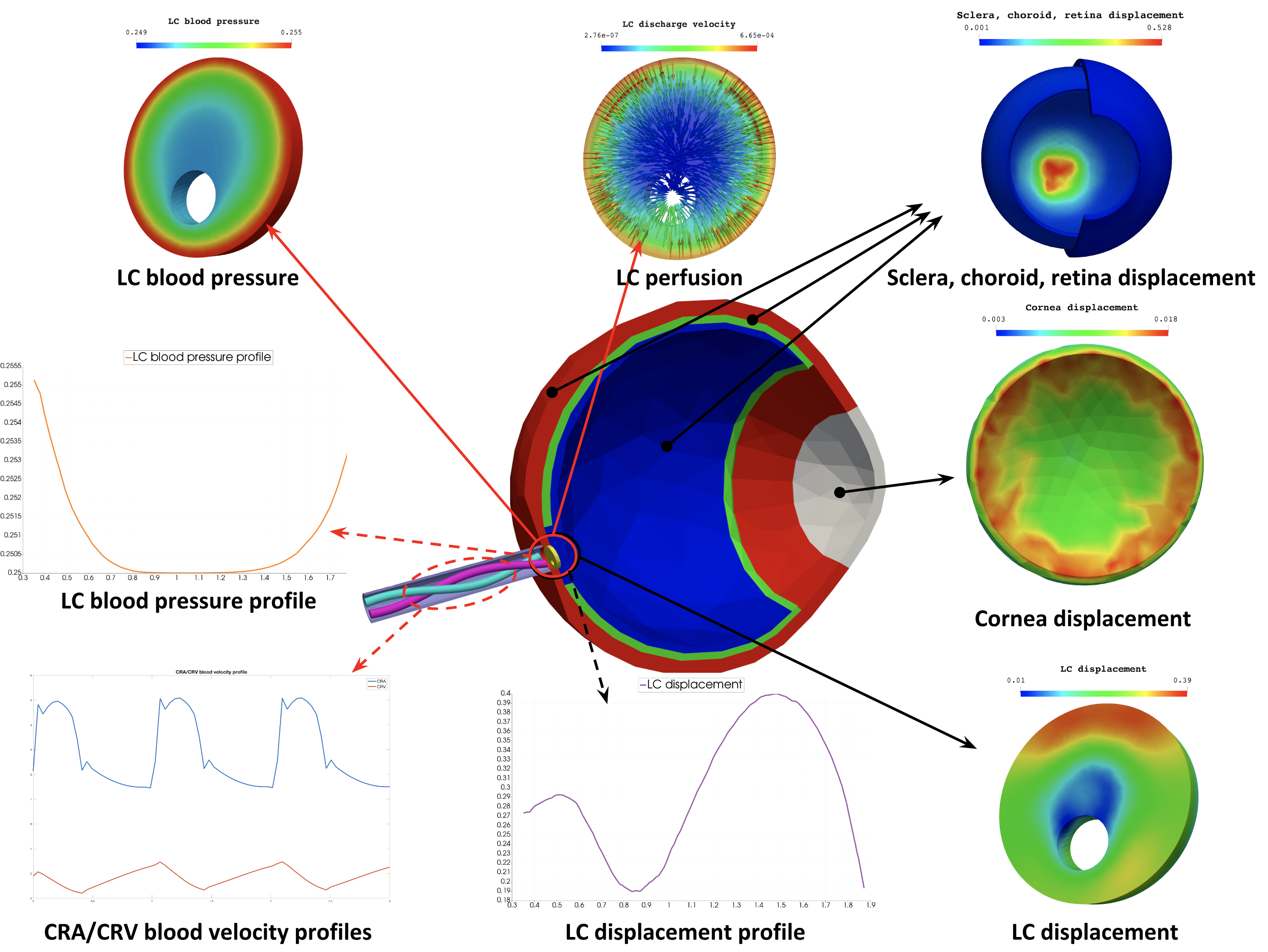

Some Feel++ Applications

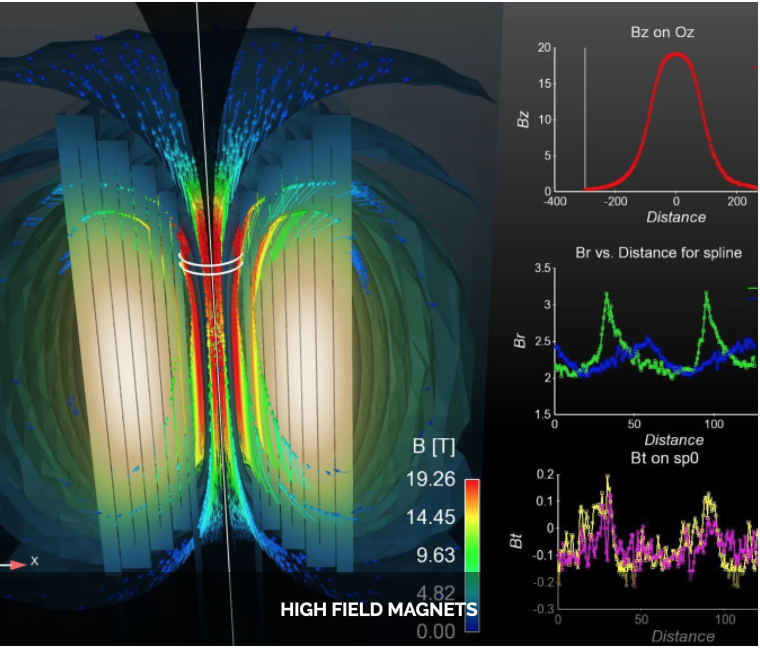

| Health (Rheology) | Physics (High Field Magnets) | Physics (Deflectometry) |
|---|---|---|

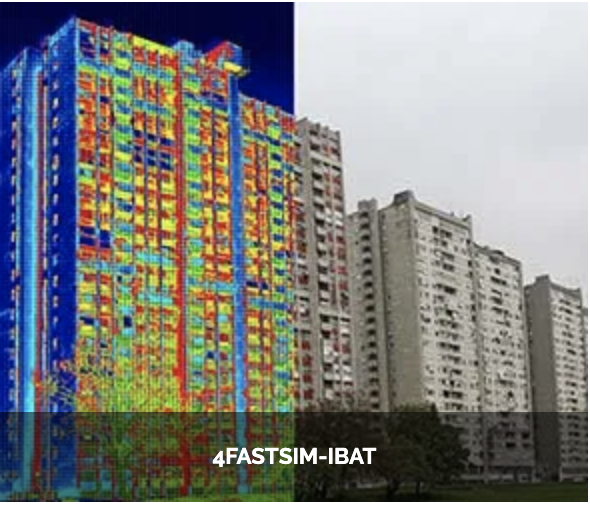

| Health (Micro swimmers) | Engineering (Buildings) | Health (Eye/Brain) |
|---|---|---|

Cemosis Projects

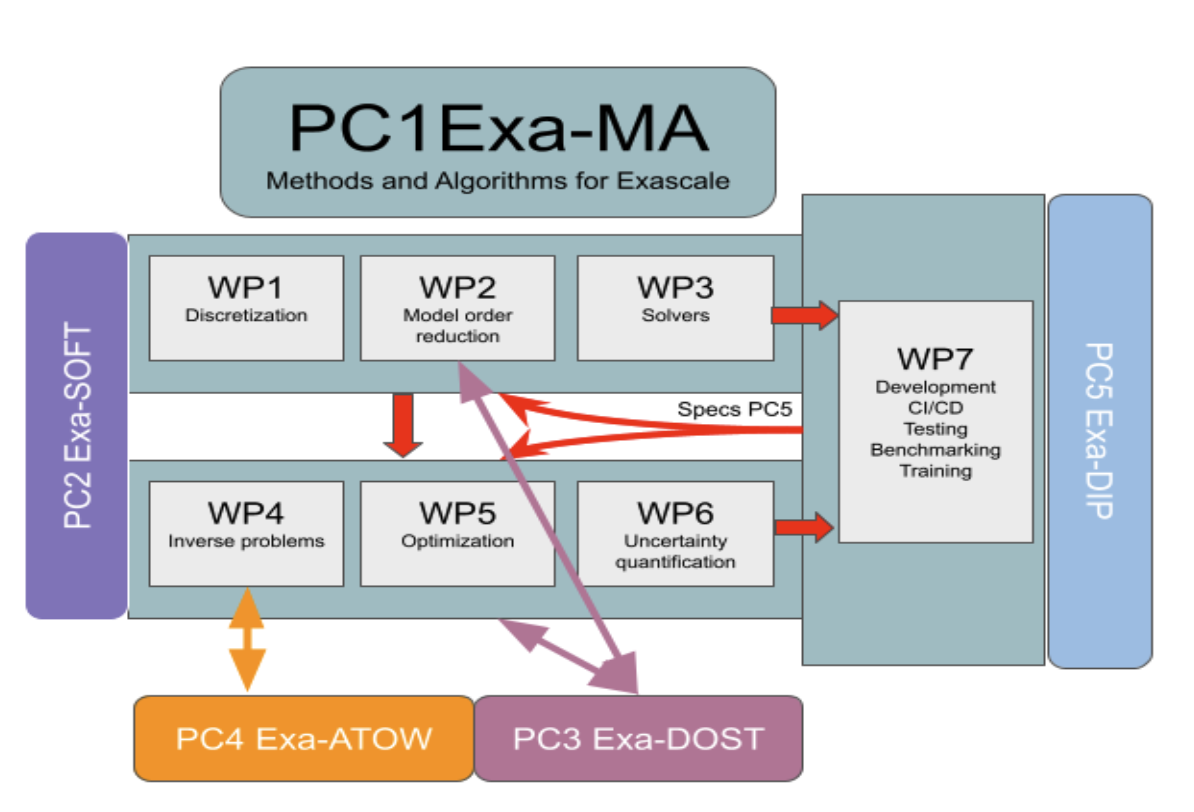

NumPEx and Exa-MA: Methods and Algorithms at Exascale

NumPEx is the French initiative for exascale computing. Exa-MA is one of the NumPEx projects.

- Challenges
  - Enable extreme-scale computing for vastly more accurate predictive models
  - Create digital copies of physical assets
  - Apply to environmental, health, energy, industrial and fundamental knowledge challenges
- Objectives
  - to develop methods, algorithms, and implementations that, taking advantage of exascale architectures, empower modeling, solving, assimilating model and data, optimizing and quantifying uncertainty, at levels that are unreachable at present
  - to develop and contribute to software libraries for the exascale software stack
  - to identify and co-design methodological and algorithmic patterns at exascale
  - to enable AI algorithms to achieve performance at exascale
  - to provide demonstrators: openly available mini-apps and proxy-apps
  - to create, animate and foster a community around exascale (and HPC) computing

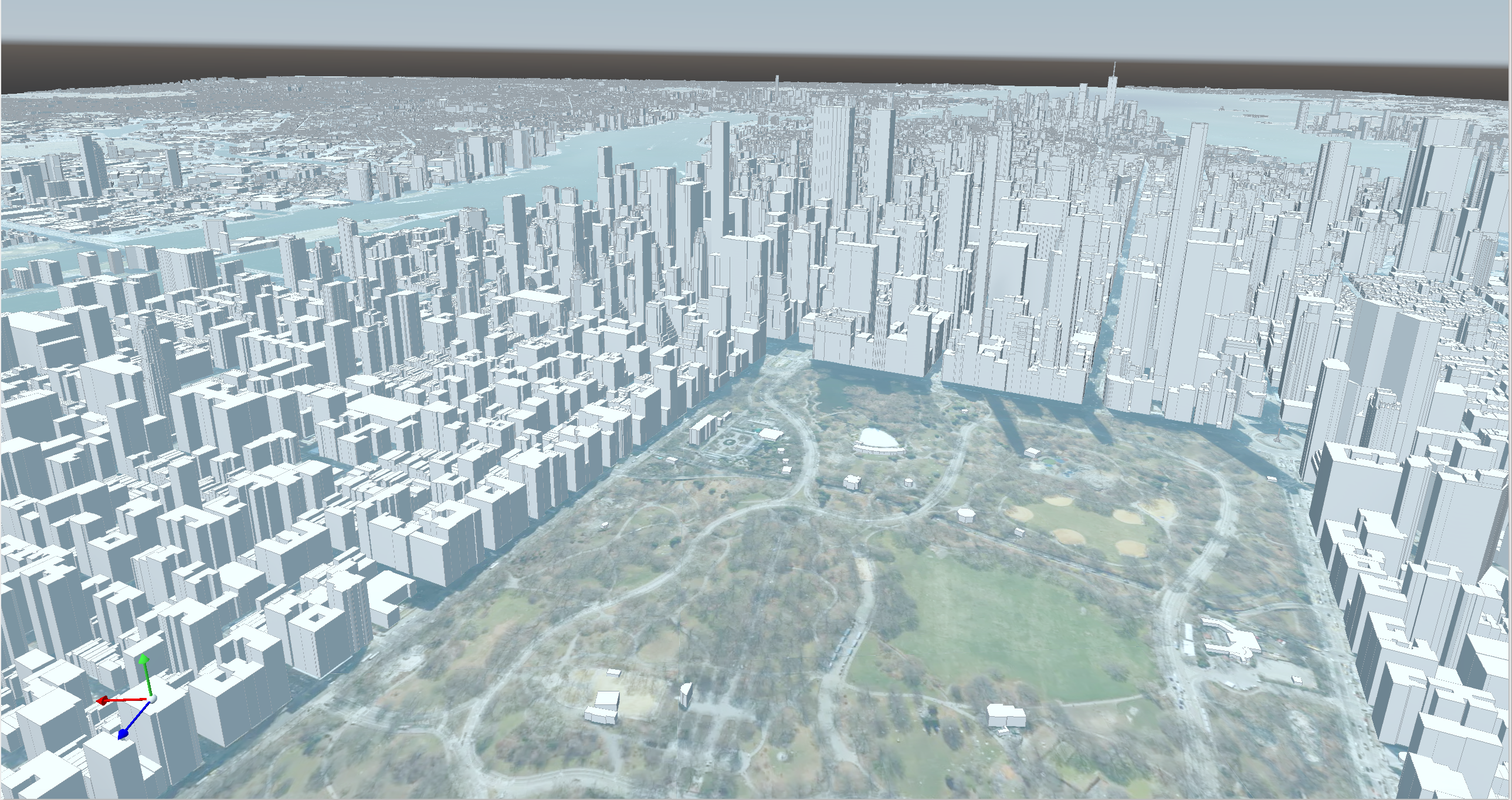

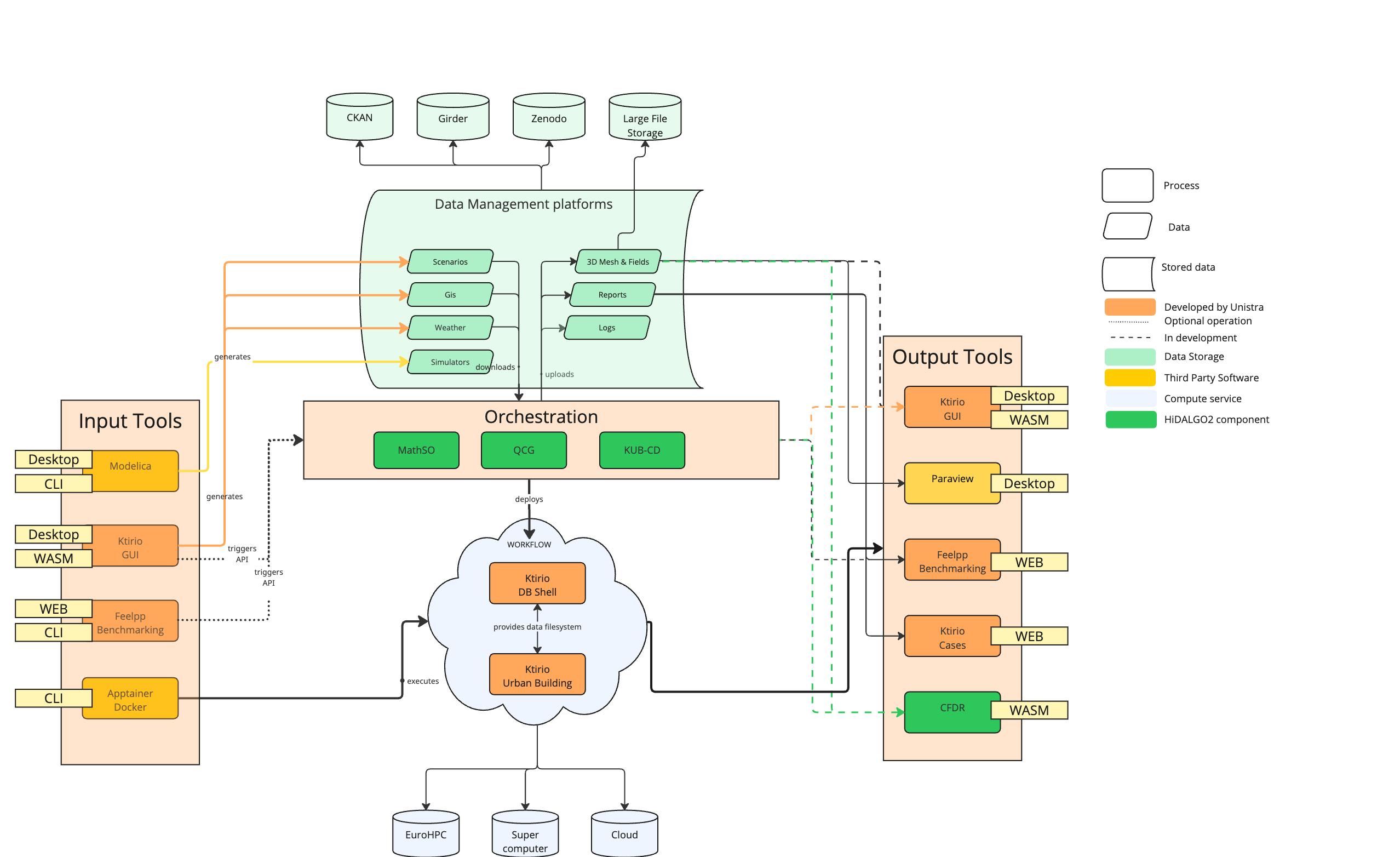

CoE Hidalgo2: Urban Building Pilot

In the Center of Excellence (CoE) Hidalgo2 we develop an urban building energy simulation pilot application.

Introduction to Containers

What are Containers?

- Lightweight software packages encapsulating everything needed to run a specific task (minus the OS kernel).
- Based on Linux kernel features only.
- Processes and user-level software are isolated.
- Portable software ecosystem.
- Comparable to "chroot on steroids".
- Docker is the most common tool:
  - Available on all major platforms.
  - Widely used in industry.
  - Integrated Docker Hub registry.

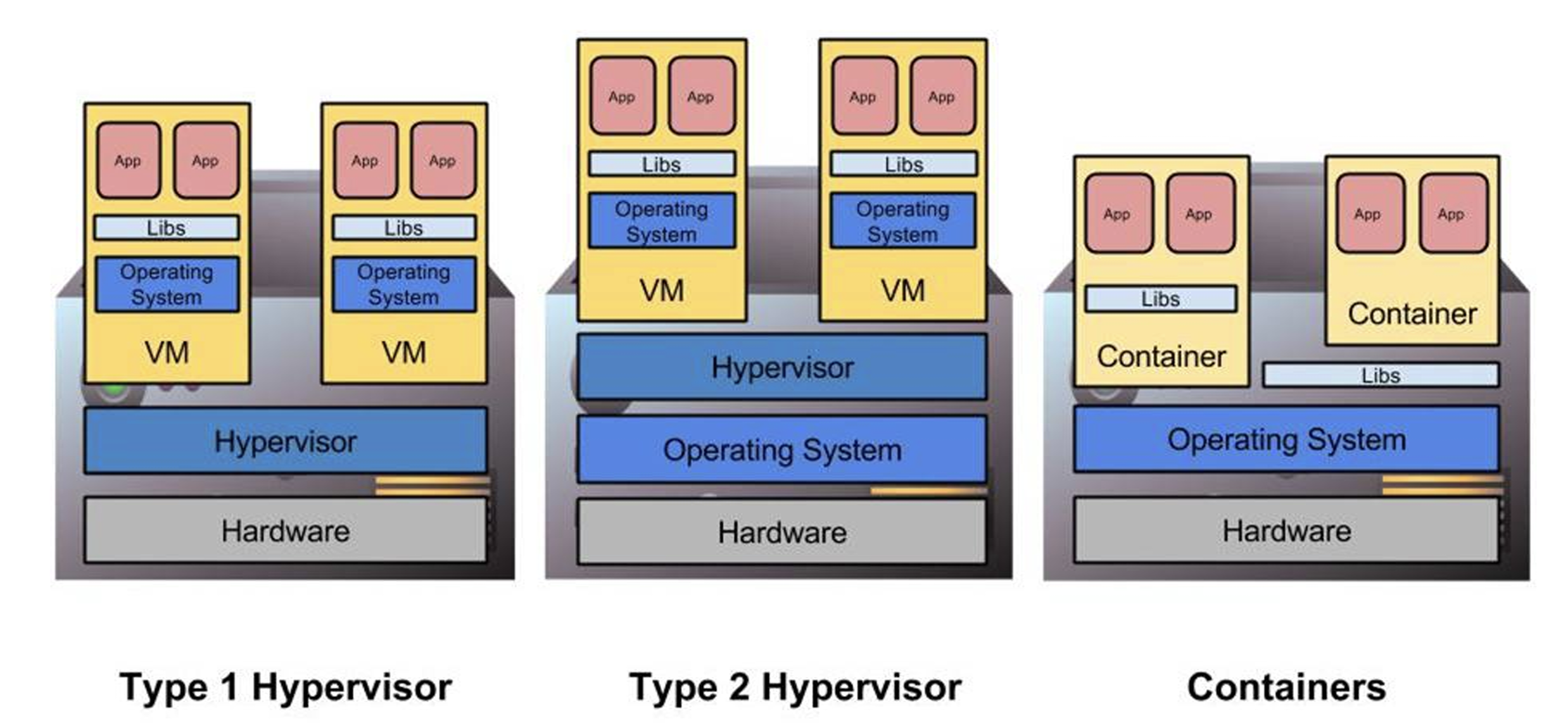

Hypervisors and Containers

- Type 1 hypervisors run directly on the hardware, beneath the guest operating systems.
- Type 2 hypervisors run as applications on top of a host OS.
- Containers function as an advanced chroot: they share the host kernel and skip hardware virtualization.
- The level at which abstraction occurs can impact performance.
- All approaches facilitate custom software stacks on shared hardware.

Background of Virtualization

- Advancements in virtualization have fundamentally transformed computing.
- Platforms and projects that illustrate these changes: FutureGrid, Magellan, Chameleon Cloud, and Hobbes.
- Key distinctions in OS-level virtualization:
  - Containerized user-level applications for enhanced portability.
  - Shared OS kernel among containers, with isolation provided by Linux namespaces and cgroups.
  - Superior performance with some trade-offs in OS customization flexibility.

Why Containers for HPC?

HPC Software Deployment Challenges

Traditional HPC environments have long relied on centrally maintained software modules to manage complex dependencies. While this approach allows multiple users to share common libraries and tools, it comes with significant challenges:

- Complex Software Dependencies & Reproducibility
  - Module Conflicts: Users must navigate conflicting versions of compilers, libraries, and applications. This often leads to "dependency hell" where even minor version differences cause failures in compiling or running HPC codes.
  - Centralized Management: Since system administrators control the available modules, individual researchers may struggle to install custom or cutting-edge software versions tailored to their project needs.
  - Reproducibility Issues: Reproducing an experimental environment becomes difficult when software versions and configurations are managed outside of the user’s control. Small differences in the installed environment can lead to divergent results, hindering scientific validation and collaboration.
- Performance-Critical, Consistent Environments for Bulk-Synchronous MPI Workloads
  - Strict Synchronization: HPC applications, especially those using MPI, operate in bulk-synchronous phases where all nodes must coordinate their computation. Any inconsistency in the underlying software (like different MPI library versions) can cause synchronization issues or performance degradation.
  - Scalability Requirements: When scaling to thousands of nodes, even minor performance overheads become significant. Ensuring a consistent, highly optimized environment across all nodes is critical, as any variability can lead to bottlenecks or failure in achieving optimal performance.
  - Optimized Stacks: HPC workloads often rely on finely tuned communication libraries, hardware-specific optimizations, and custom drivers. Containers help by encapsulating these finely tuned environments, ensuring that every node uses the same software stack and thereby maintaining the performance characteristics required for large-scale, bulk-synchronous operations.

Containers help address HPC hell.

The HPC Environment Matrix from Hell

| HPC Software / Components | Laptop/Desktop (Development) | Tier-2 HPC Center | Tier-1 HPC Center | Tier-0 Supercomputer | Cloud Infrastructure |
|---|---|---|---|---|---|
| HPC Code #1 stack (MPI-based) | ? | ? | ? | ? | ? |
| HPC Code #2 (GPU-accelerated) | ? | ? | ? | ? | ? |
| HPC Code #3 (AI/ML Pipeline) | ? | ? | ? | ? | ? |
| HPC Code #4 (I/O-heavy Workflow) | ? | ? | ? | ? | ? |
| HPC Libraries (BLAS, LAPACK, MKL, etc.) | ? | ? | ? | ? | ? |
| Compilers & Toolchains (GCC, Intel, LLVM) | ? | ? | ? | ? | ? |
| MPI Variants (Open MPI, MPICH, MVAPICH) | ? | ? | ? | ? | ? |
| Performance Tuning & Profilers | ? | ? | ? | ? | ? |

This table highlights the complexity of ensuring every component (MPI libraries, GPU drivers, compilers, file systems, security, etc.) matches across multiple HPC environments. Each “?” can represent different OS versions, library dependencies, hardware constraints, scheduler configurations, or security policies. Containers help encapsulate these dependencies, reducing the “matrix from hell” into a single, portable environment that can run consistently across laptops, Tier-n HPC clusters, and large supercomputers.

HPC Environment Modules: Helpful but Still Broken

Environment modules (e.g., Environment Modules or Lmod) are widely used in HPC to manage complex software stacks. They allow users to load or unload specific versions of compilers, libraries, and applications by manipulating environment variables (e.g., PATH, LD_LIBRARY_PATH). While this approach has been the standard on many supercomputers for years, it still poses several problems:

- Lack of True Isolation
  - Modules only adjust environment variables, so if two modules conflict (e.g., different compiler versions), users must manually troubleshoot or re-order module loads. There is no guaranteed isolation of dependencies.
- Cluster-Specific Configurations
  - Each HPC center (and even each cluster within a center) may provide different module names, versions, or dependencies. A workflow that works on one cluster may fail on another due to missing or differently configured modules.
- Reproducibility Gaps
  - Because modules rely on the HPC system’s specific software installation, replicating an exact environment later or on a different system can be difficult. Minor changes in system-provided modules can invalidate previous runs.
- Complex Dependencies
  - Some scientific codes depend on intricate chains of libraries. Even if modules are available, loading them in the correct order (and verifying version compatibility) can become a “dependency puzzle,” making HPC usage less user-friendly.
- Administrative Overhead
  - Sysadmins must maintain a growing set of modules for different libraries, compilers, and versions. This is time-consuming, prone to error, and may lag behind the latest releases needed by researchers.
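To make the "modules only adjust environment variables" point concrete, here is a minimal Python sketch of what loading a module amounts to: prepending a directory to PATH. The module names and install prefixes are hypothetical, and the sketch deliberately leaves out what real module systems add on top (unloading, conflict declarations in modulefiles, automatic version swaps, other variables such as LD_LIBRARY_PATH).

```python
# Hypothetical module table: module name -> directory it prepends to PATH.
MODULES = {
    "gcc/12": "/opt/gcc-12/bin",
    "gcc/13": "/opt/gcc-13/bin",
    "openmpi/4": "/opt/openmpi-4/bin",
}


def load(env, name):
    """Return a copy of env with the module's directory prepended to PATH."""
    new_env = dict(env)
    parts = [p for p in env.get("PATH", "").split(":") if p]
    new_env["PATH"] = ":".join([MODULES[name]] + parts)
    return new_env


def first_provider(env, candidates):
    """Return the first PATH entry found in candidates, as a shell lookup would."""
    for entry in env["PATH"].split(":"):
        if entry in candidates:
            return entry
    return None


base = {"PATH": "/usr/bin"}
gcc_dirs = {"/opt/gcc-12/bin", "/opt/gcc-13/bin"}

# Same two modules, different load order: a different compiler wins.
a = load(load(base, "gcc/12"), "gcc/13")
b = load(load(base, "gcc/13"), "gcc/12")
print(first_provider(a, gcc_dirs))  # /opt/gcc-13/bin
print(first_provider(b, gcc_dirs))  # /opt/gcc-12/bin
```

Nothing in the resulting environment records which compiler was intended; the outcome depends only on load order. A container image fixes that choice at build time instead.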

Why Containers Help

By contrast, containers bundle the entire user-space software stack (compilers, libraries, application code) into a portable image. This ensures:

- Isolation & Consistency: The environment inside the container remains the same, regardless of the underlying HPC system.
- Reproducibility: Scientific workflows become more easily reproducible, since the container image fully specifies dependencies.
- Portability: A container built on a user’s laptop can run on a Tier-n cluster or supercomputer with minimal changes.
- Reduced Admin Burden: Researchers control and update their containers without waiting for system administrators to provide new or specialized modules.

In summary, environment modules have helped manage HPC software complexity for many years, but they are not a complete solution to the challenges of portability, reproducibility, and dependency isolation. Containers can complement or even replace modules by providing a fully self-contained runtime environment, reducing “dependency hell” and simplifying cross-platform HPC workflows.

Benefits of Containerization for HPC

- Reproducibility: Consistent, version-controlled environments.
- Portability: Develop locally and deploy on supercomputers with minimal changes.
- User-space Environments: Run containers without requiring root access.
- Scheduler Compatibility: Integrate with HPC schedulers (Slurm, PBS, etc.).
- Compared with traditional software management (modules, environment scripts), containers offer a unified approach: one image carries the entire user-space stack.
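As an illustration of scheduler compatibility, the Python sketch below renders a small Slurm batch script that launches a command inside an Apptainer image through `srun`. The image name `app.sif` and the program `./my_mpi_app` are hypothetical placeholders, and a real job script would usually also need site-specific settings (account, partition, time limit) that are omitted here. Whether `srun` can start MPI ranks inside the container depends on how MPI is set up on the cluster, so treat this as a sketch rather than a recipe.

```python
import shlex


def render_sbatch(image, command, nodes, tasks_per_node):
    """Render a Slurm batch script that runs `command` inside an Apptainer image."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        "",
        # srun starts one containerized process per task requested above.
        "srun apptainer exec " + shlex.quote(image) + " " + shlex.join(command),
    ]
    return "\n".join(lines) + "\n"


# Hypothetical image and application names, for illustration only.
script = render_sbatch("app.sif", ["./my_mpi_app", "--size", "100"], nodes=2, tasks_per_node=4)
print(script)
```

The container is just another process launched by the scheduler: no daemon has to run on the compute nodes, which is why this pattern fits batch systems well.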

Container Technologies in HPC

Overview of Container Runtimes

- Docker
  - Strengths: A vast ecosystem, straightforward workflows, and seamless integration with many development tools.
  - Limitations: Typically requires root privileges (via a daemon) and lacks native optimizations for HPC (e.g., multi-node MPI jobs, specialized network interconnects).
- Singularity/Apptainer
  - Focus: Specifically designed for HPC.
  - Key Advantages: Runs containers without root privileges, integrates well with MPI and GPU workflows, and supports HPC schedulers.
- Podman
  - Rootless Docker Alternative: Allows building and running containers without a daemon, but less common in HPC settings.
- Shifter and Charliecloud
  - Additional HPC-Oriented Tools: Used at some HPC centers for containerized workflows.

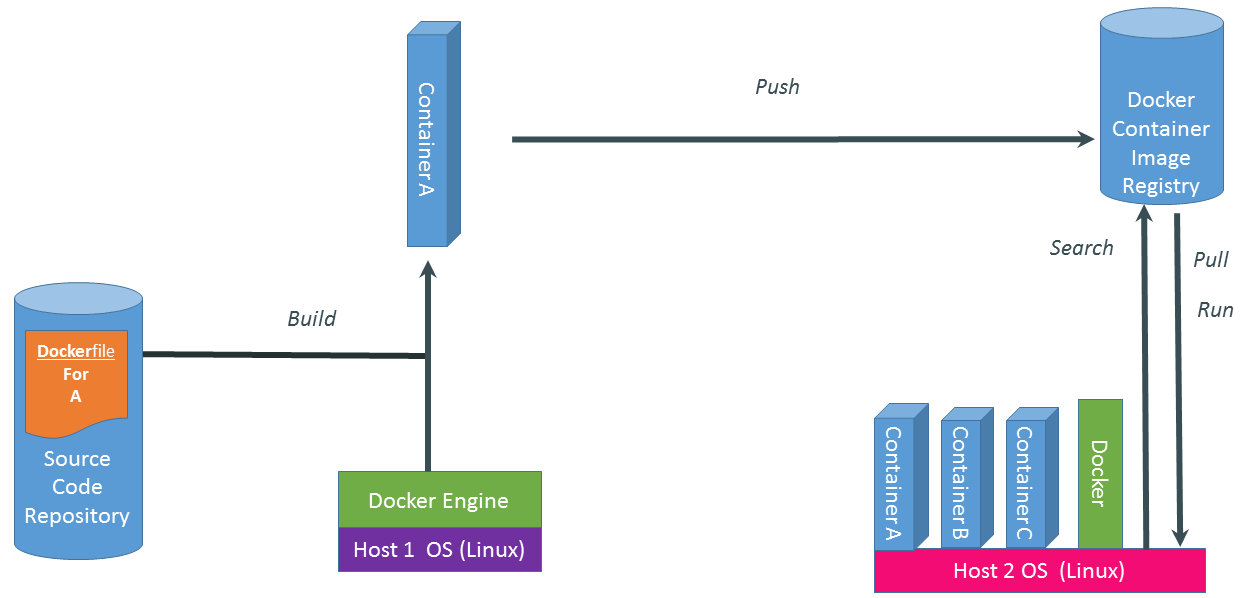

Docker in a Nutshell

Docker follows a client-server model:

- The Docker Client sends commands (build, run, push, pull) to the Docker Daemon, which handles image management and container lifecycle.
- Images are read-only templates with all dependencies needed to run your application.
- Containers are live instances of images, isolated by Linux namespaces and cgroups.
- A Dockerfile defines how to build an image (base OS, libraries, environment variables, etc.).
- A Registry (e.g., Docker Hub) is a repository for storing and sharing images.
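To show what a Dockerfile typically contains, the following Python sketch assembles one from its main ingredients: a base image, packages to install, environment variables, and a default command. The base image `ubuntu:24.04`, the package list, and the variable are illustrative choices, and the install line assumes a Debian/Ubuntu base (it uses `apt-get`).

```python
import json


def render_dockerfile(base, packages, env, cmd):
    """Assemble the text of a minimal Dockerfile (assumes an apt-based base image)."""
    lines = [f"FROM {base}"]
    if packages:
        lines.append(
            "RUN apt-get update && apt-get install -y --no-install-recommends "
            + " ".join(packages)
            + " && rm -rf /var/lib/apt/lists/*"
        )
    for key, value in env.items():
        lines.append(f"ENV {key}={value}")
    # Exec-form CMD is written as a JSON array.
    lines.append("CMD " + json.dumps(cmd))
    return "\n".join(lines) + "\n"


dockerfile = render_dockerfile(
    base="ubuntu:24.04",
    packages=["python3"],
    env={"OMP_NUM_THREADS": "1"},
    cmd=["python3", "--version"],
)
print(dockerfile)
```

Each instruction maps onto one item in the list above: `FROM` picks the base OS, `RUN` installs libraries, `ENV` sets environment variables, and `CMD` names what a container started from the image runs by default.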

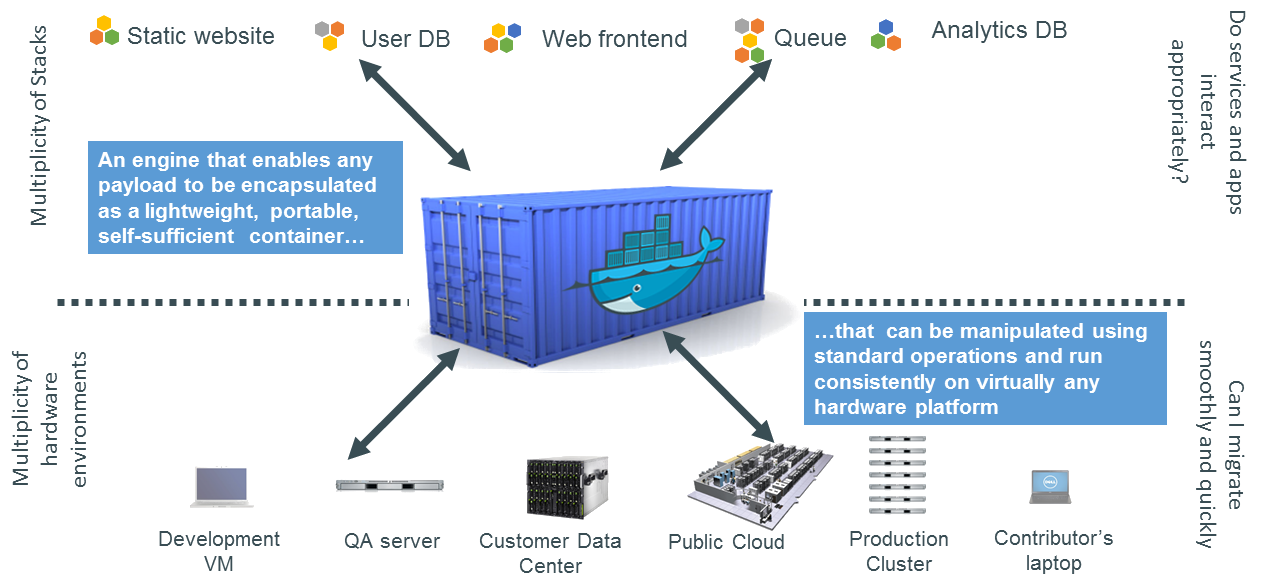

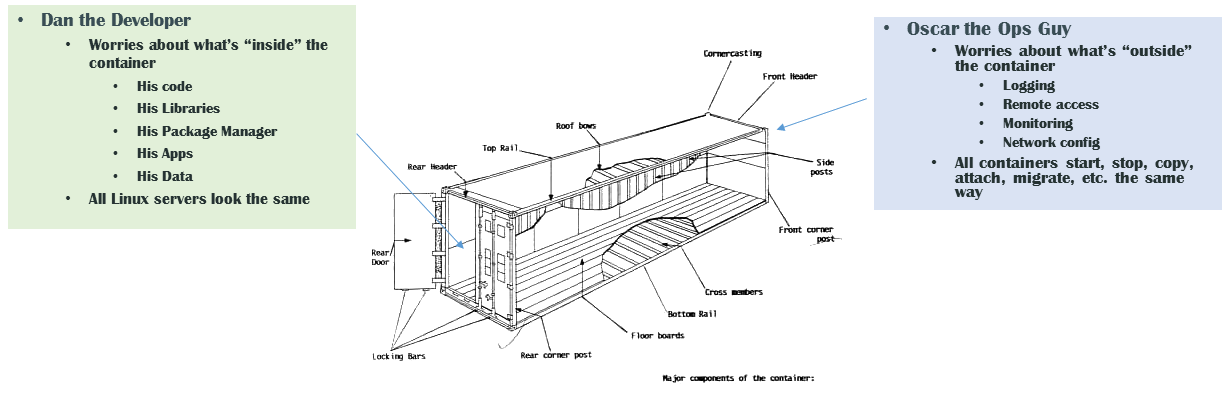

The Shipping Container Metaphor

Docker is often compared to shipping containers:

- Developers (“inside” the container) focus on code, libraries, and configurations.
- Operations (“outside” the container) handle logging, monitoring, remote access, and networking.
- This separation of concerns fosters simpler collaboration and consistent deployments across multiple environments (development laptops, QA servers, HPC clusters, etc.).

Why Docker Is (Not Always) Ideal for HPC

- Root Access & Security: Docker’s daemon runs with elevated privileges, which many HPC centers disallow for security reasons.
- HPC-Specific Hardware: Docker does not natively integrate with HPC resource managers (e.g., Slurm, PBS) or specialized interconnects (e.g., InfiniBand) without additional configuration.
- MPI & Multi-Node Workloads: Running large-scale MPI jobs across multiple nodes with Docker can be cumbersome, requiring custom networking and environment tweaks.

Despite these drawbacks, Docker remains very popular for development and testing on local machines. You can then migrate or adapt Docker images for HPC-oriented runtimes like Apptainer.

Apptainer (Formerly Singularity)

Apptainer (originally Singularity) is a container platform built with HPC in mind. Key highlights include:

- Rootless Execution: Containers operate as the user who launches them, preventing privilege escalation.
- Seamless Integration with HPC Schedulers: Compatible with Slurm, PBS, LSF, etc., without requiring a persistent daemon.
- Native MPI & GPU Support: Works with host MPI launchers and can bind host GPU drivers (for example with the `--nv` flag for NVIDIA GPUs) and host MPI libraries into the container.
- SIF Format: Stores the container as a single file (SIF), simplifying distribution and cryptographic signing.
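The highlights above translate into a short command line. The Python sketch below builds the argument list for `apptainer exec`, optionally adding `--nv` (NVIDIA GPU support) and `--bind` (mounting host directories). The image name, program, and paths are hypothetical; the list can be handed to `subprocess.run` on a machine where Apptainer is installed.

```python
def apptainer_exec(image, command, binds=(), nvidia=False):
    """Build an argv list for running `command` inside an Apptainer image."""
    argv = ["apptainer", "exec"]
    if nvidia:
        # Make the host NVIDIA driver libraries available inside the container.
        argv.append("--nv")
    for host_path, container_path in binds:
        # Mount a host directory at the given path inside the container.
        argv += ["--bind", f"{host_path}:{container_path}"]
    argv.append(image)
    argv += list(command)
    return argv


# Hypothetical image, program and paths, for illustration only.
argv = apptainer_exec(
    "solver.sif",
    ["./solve", "input.cfg"],
    binds=[("/scratch/project", "/data")],
    nvidia=True,
)
print(" ".join(argv))
```

Because there is no daemon, the resulting process runs as the calling user, which is what lets the same command appear unchanged inside a batch job.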

Comparing Docker and Apptainer

| Feature | Docker | Apptainer |
|---|---|---|
| Execution Model | Client-server daemon (root-based) | Rootless (user-mode) |
| HPC Integration | Requires extra steps for MPI, batch schedulers, GPUs | Built-in MPI, GPU, scheduler support |
| Security Model | Daemon runs as root; user must be in the | Minimal privilege escalation; user runs containers |
| Image Format | Layered images (UnionFS) from Docker Hub | Single-file SIF images (pullable from Docker/OCI registries) |
| Typical Usage | General development, CI/CD, microservices | HPC research, multi-tenant secure clusters |

Container Registries

Container registries store and distribute images:

- Docker Hub: Public and private repositories
- GitHub/GitLab Registries: ghcr.io, registry.gitlab.com
- NVIDIA NGC: GPU-focused images
- Local or Institutional Registries: On-premise solutions for secure HPC environments

Benefits of using a registry:

- Version Control: Tag images (e.g., v1.0, v2.0) for reproducible environments
- Collaboration: Team members can pull the same image
- Deployment: Easy retrieval of images on HPC systems (if allowed by policy)
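To illustrate how a registry, a repository, and a tag combine into a single image reference, here is a simplified Python parser. The example references are hypothetical, and the sketch is intentionally incomplete: it does not handle digests (`@sha256:...`) and does not reproduce Docker's full normalization rules.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref, default_registry="docker.io", default_tag="latest"):
    """Split an image reference into registry, repository and tag (simplified)."""
    # A tag separator is a ':' located after the last '/', so the port in
    # "localhost:5000/app" is not mistaken for a tag.
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]
    else:
        name, tag = ref, default_tag
    first, sep, rest = name.partition("/")
    # Treat the first path component as a registry host if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        return ImageRef(first, rest, tag)
    return ImageRef(default_registry, name, tag)


print(parse_image_ref("ghcr.io/example/solver:v1.0"))
print(parse_image_ref("ubuntu"))
```

An untagged reference falls back to `latest`, which can point to different content over time; pinning an explicit tag such as `v1.0` is what makes a pulled environment repeatable.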

Key Takeaways

- Docker is ubiquitous and user-friendly for local development, but not always HPC-friendly due to security and multi-node concerns.
- Apptainer is purpose-built for HPC, offering rootless execution and seamless MPI/GPU support.
- Registries enable consistent, versioned sharing of container images across laptops, Tier-n clusters, and supercomputers.

A common workflow is to build and test with Docker locally, push to a registry, then pull the image into Apptainer on HPC systems for production runs. See the hands-on section for practical examples of running containers in an HPC environment.
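The build, push, and pull sequence can be sketched as three commands. The Python function below only assembles them as argument lists; the image reference `ghcr.io/example/solver:v1.0` and the file name `solver.sif` are hypothetical, and pushing assumes you are already logged in to the registry.

```python
def hpc_workflow(image, sif, context="."):
    """Return the commands for the Docker-to-Apptainer workflow as argv lists."""
    return [
        # On the laptop: build the image from the Dockerfile in `context`.
        ["docker", "build", "-t", image, context],
        # On the laptop: publish the image to the registry named in `image`.
        ["docker", "push", image],
        # On the cluster: convert the registry image into a single SIF file.
        ["apptainer", "pull", sif, f"docker://{image}"],
    ]


steps = hpc_workflow("ghcr.io/example/solver:v1.0", "solver.sif")
for argv in steps:
    print(" ".join(argv))
```

The first two commands run where Docker is available, the third on the HPC system; the registry is the only thing the two sides need to share.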

Q&A and Discussion

- Open floor for questions.
- Discussion on participants’ HPC environments and container use cases.
- Share experiences and challenges with containerized HPC workflows.

References & Further Reading

- Singularity/Apptainer documentation: apptainer.org/
- Docker documentation: docs.docker.com/
- Additional HPC container best practices:
  - Kurtzer, G. M., Sochat, V., & Bauer, M. W. (2017). Singularity: Scientific containers for mobility of compute. PLOS ONE, 12(5), e0177459. DOI: doi.org/10.1371/journal.pone.0177459
  - NERSC – Containers in HPC: Training Event (March 2025)
  - Arango, C., Dernat, R., & Sanabria, J. (2024). Performance Evaluation of Container-based Virtualization for High Performance Computing Environments. HAL: hal.archives-ouvertes.fr/hal-04795161
  - Keller Tesser, R., & Borin, E. (2022). Containers in HPC: a survey.
- EuroHPC offers a comprehensive portal with detailed information on HPC initiatives and training resources.
  - Official website: www.eurohpc-project.eu/
  - Training documentation: Available through the EuroHPC portal and affiliated training programs.
